Ship prompts that don't break in production

Test, compare, and version prompts instantly in a shared workspace.

No API keys required. No more spreadsheets.

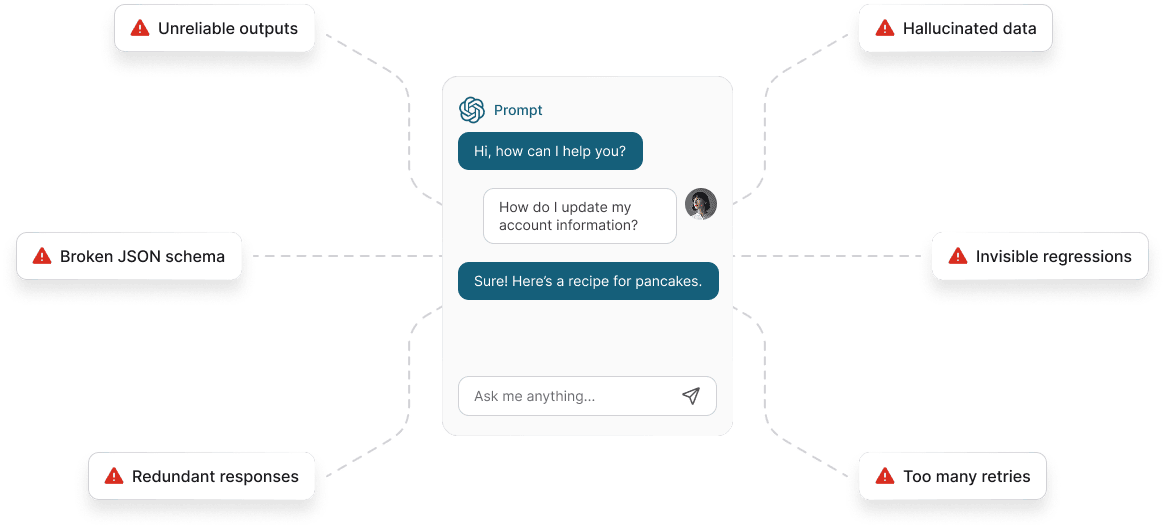

Your prompts keep breaking.

And every fix breaks something else.

If shipping AI features feels like firefighting, you’re not alone.

Meet LangFast

The LLM evaluation platform for product teams to prototype, test and ship robust AI features 10x faster.

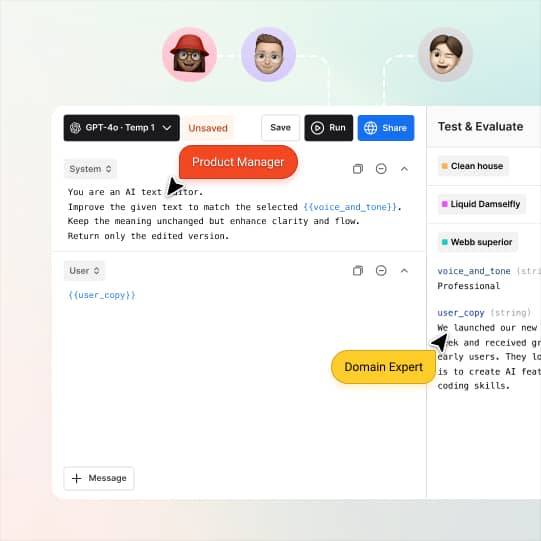

Prompt with experts

Building good AI starts with understanding your users — that’s why subject matter experts make the best prompt engineers.

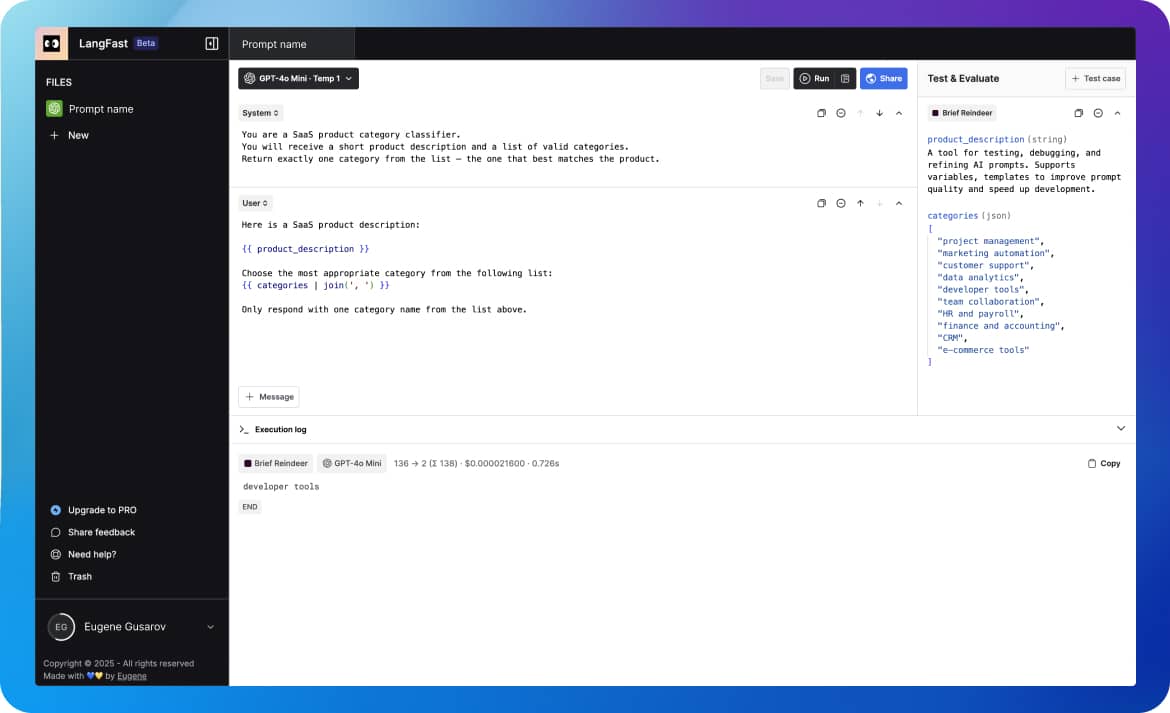

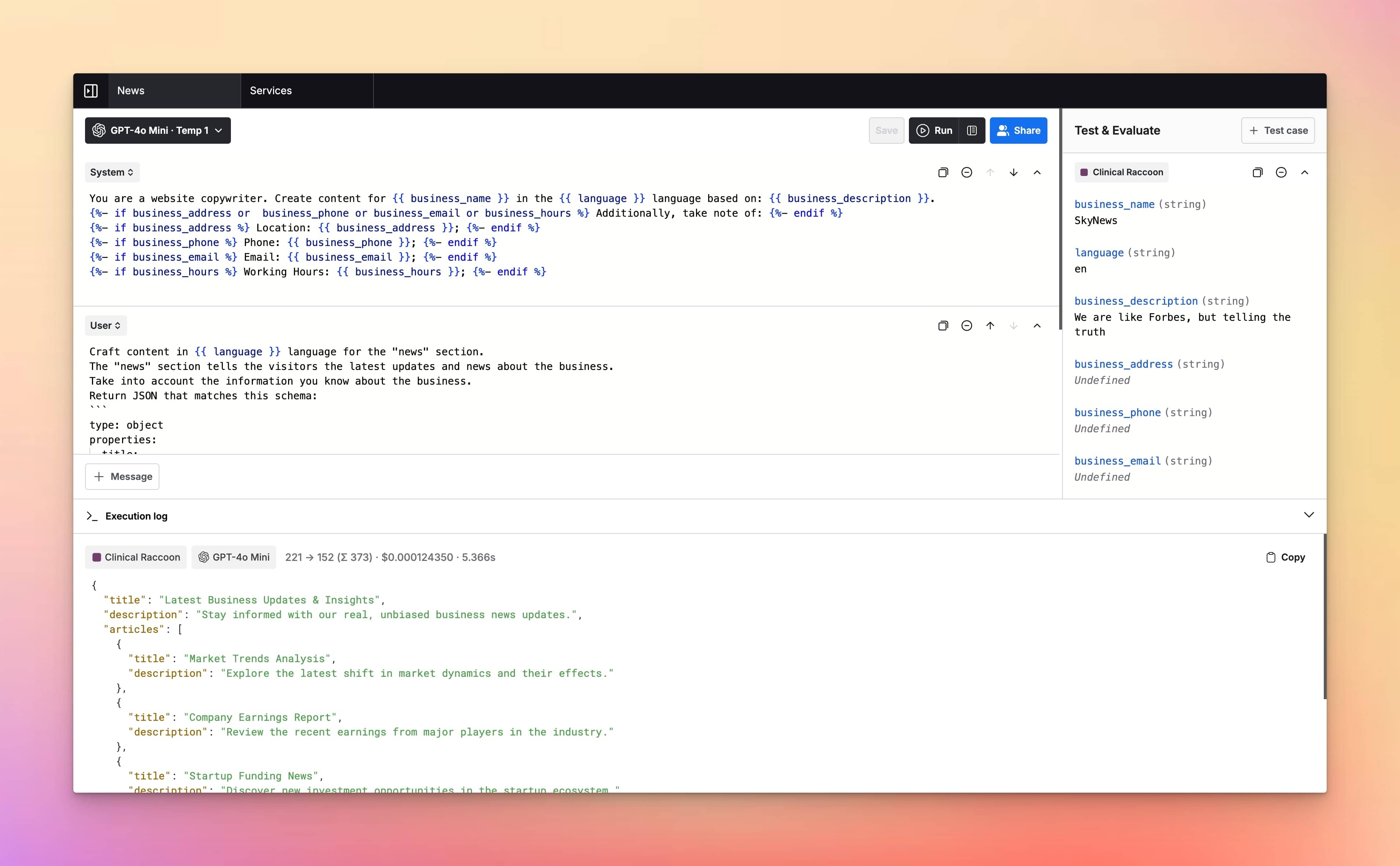

Prompt like a Pro

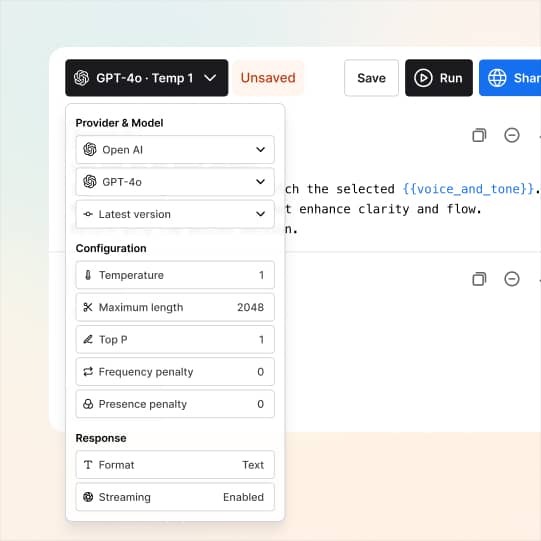

Take the hassle out of prompt prototyping. Our AI playground speeds up the process, saving you time and effort when designing prompts.

Evaluate iteratively

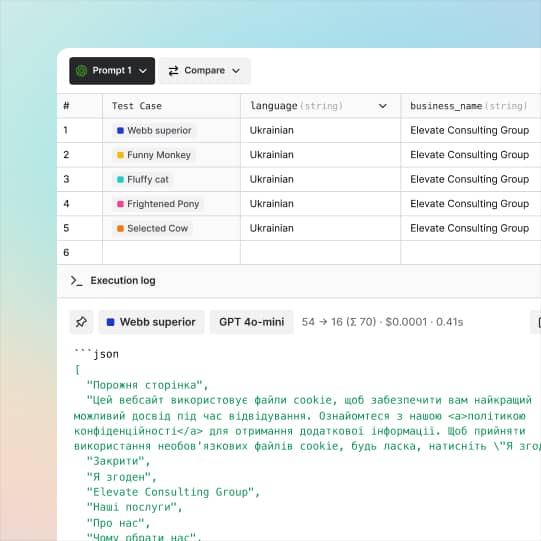

Thoroughly validate your prompts before deployment — combining human insight with AI precision.

Meet LangFast users

LangFast empowers hundreds of people to test and iterate on their prompts faster.

Predictable, volume-based pricing

Frequently Asked Questions

What is LangFast?

LangFast is an online LLM playground for rapid testing and evaluation of prompts. You can run prompt tests across multiple models, compare responses side-by-side, debug results, and iterate on prompts with no setup or API keys required.

How does LangFast work?

Type a prompt and stream a response, then switch models or compare them side-by-side. You can save or share a link, use Jinja2 templates or variables, and create as many test cases as you want.
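As a rough illustration of how a templated prompt with variables expands into test cases, here is a minimal sketch using the open-source Jinja2 library for Python. The template text, the variable names, and the `render_cases` helper are all hypothetical, and the sketch says nothing about how LangFast handles templates internally.

```python
from jinja2 import Environment, StrictUndefined

# StrictUndefined makes a missing variable raise an error instead of
# silently rendering as an empty string.
env = Environment(undefined=StrictUndefined)

# Hypothetical prompt template; {{ ... }} marks a variable.
PROMPT = env.from_string(
    "Summarize the following {{ doc_type }} in {{ max_words }} words:\n{{ text }}"
)


def render_cases(template, cases):
    """Render one prompt string per test case (each case is a dict of variables)."""
    return [template.render(**case) for case in cases]


# Hypothetical test cases for the template above.
cases = [
    {"doc_type": "support ticket", "max_words": 30, "text": "My invoice is wrong."},
    {"doc_type": "release note", "max_words": 20, "text": "Fixed login timeout."},
]

prompts = render_cases(PROMPT, cases)
for prompt in prompts:
    print(prompt)
    print("---")
```

Keeping the template separate from the test cases means the same wording can be checked against many inputs, and a forgotten variable fails loudly rather than producing a subtly broken prompt.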

Which large language models can I try on LangFast?

Currently, LangFast supports OpenAI GPT models only. If you need access to models from other providers, just let us know, and we'll add them.

Do I need an API key?

No. You can start using LangFast immediately. Keys are optional for power users.

How fast is LangFast?

We stream tokens through a tiny proxy layer so you can use LangFast without your own API keys. Typical time to first token is a fraction of a second. Speed varies by model and load.
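If you want to check time to first token yourself, the measurement can be sketched in a few lines of Python. This is a minimal sketch, not LangFast code: `measure_stream` and `fake_stream` are hypothetical names, and the fake stream with its delays only stands in for a real streamed model response.

```python
import time
from typing import Iterable, Iterator, Tuple


def measure_stream(tokens: Iterable[str]) -> Tuple[float, float, str]:
    """Consume a token stream; return (seconds to first token, total seconds, text)."""
    start = time.perf_counter()
    first = None
    parts = []
    for tok in tokens:
        if first is None:
            # Record the delay the first time a token arrives.
            first = time.perf_counter() - start
        parts.append(tok)
    total = time.perf_counter() - start
    # An empty stream never produced a first token; report the total instead.
    return (first if first is not None else total, total, "".join(parts))


def fake_stream() -> Iterator[str]:
    """Stand-in for a streamed model response (delays are made up)."""
    time.sleep(0.05)  # simulated wait before the first token
    for tok in ["Hello", ", ", "world"]:
        yield tok
        time.sleep(0.01)  # simulated gap between tokens


ttft, total, text = measure_stream(fake_stream())
print(f"first token after {ttft:.3f}s, full response after {total:.3f}s: {text!r}")
```

Because `measure_stream` accepts any iterable of strings, the same function could wrap a real streaming client in place of `fake_stream`.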

What's the context window?

Depends on the model (e.g., 8K–200K tokens). We show it next to each model.

Can I upload files or images?

Yes, as long as they are supported by the model itself.

Can I compare models side-by-side?

Yes. You can open as many chat tabs as you want to see multiple models answer the same prompt.

Can I save or share chats?

Yes. Use the "Share" button to manage sharing permissions. You can create public URLs or share access with specific email addresses.

Where is my data processed?

We route to model providers; see the Data & Privacy page for regions and details.

Can I use outputs commercially?

Generally yes, subject to each model's terms. We link those on the model picker.

Can I bring my own API keys?

Yes, you can. Just let us know, and we'll add them to your workspace.

Does LangFast have an API?

Yes. Reach out to us to get more information.

What's the difference between LangFast and paid LLM APIs?

LangFast is point-and-click for quick evaluation, while paid LLM APIs provide programmatic control, higher throughput, predictable limits, and SLAs for production. Use LangFast to find the right prompt-to-model setup, then ship with APIs.

How does LangFast compare to OpenAI Playground or Hugging Face Spaces?

LangFast focuses on instant multi-model testing (no keys to start), a consistent UI, side-by-side comparisons, share links, and exports, all in one place. It offers a streamlined alternative to OpenAI Playground and Hugging Face Spaces.